The P&L attribution test - going, going, gone?

The P&L attribution test (PLAT) is one of the most hotly debated topics in the Fundamental Review of the Trading Book (FRTB), with industry experts doubtful whether the test can ever be made to work[1]. In this article we review the goal of the test, summarise our empirical findings and speculate on what the future holds for PLAT.

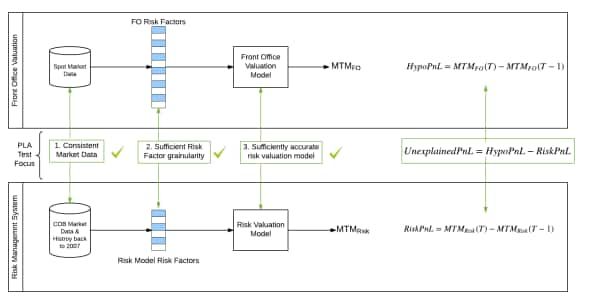

In outline, PLAT compares the P&L calculated by the front office pricing models (the hypothetical P&L) with the P&L calculated by the risk model (the risk-theoretical P&L). If the difference, known as the unexplained P&L, is too big or too variable in a given month, a breach is counted. Four or more breaches within a 12-month period will force the trading desk onto the Standardised Approach (SA), with a capital impact of up to six times[2].

Getting the unexplained P&L down to an acceptable level requires aligning three components of the calculation, namely market data, risk factors and valuation models, as illustrated in Figure 1.

Figure 1: Schematic of the PLAT
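The monthly breach rule described above can be made concrete with a minimal Python sketch. It assumes the two ratio tests from the January 2016 Basel FRTB text: the mean of unexplained P&L divided by the standard deviation of hypothetical P&L must lie within ±10%, and the variance of unexplained P&L divided by the variance of hypothetical P&L must stay below 20%. Those thresholds, the helper names (`monthly_ratios`, `is_breach`, `desk_moves_to_sa`) and the use of population statistics are our assumptions for illustration; a rebooted test may use different ratios or limits.

```python
from statistics import fmean, pstdev, pvariance

# Assumed thresholds, taken from the January 2016 Basel FRTB text; a rebooted
# test may use different ratios or limits.
MEAN_RATIO_LIMIT = 0.10      # |mean(UPL) / std(HPL)| must not exceed 10%
VARIANCE_RATIO_LIMIT = 0.20  # var(UPL) / var(HPL) must stay below 20%
MAX_BREACHES = 4             # four or more breaches in 12 months -> SA


def monthly_ratios(hpl, rtpl):
    """Return (mean ratio, variance ratio) for one month of daily P&L.

    hpl:  hypothetical P&L, revalued with front office models
    rtpl: risk-theoretical P&L, produced by the risk model
    """
    upl = [r - h for h, r in zip(hpl, rtpl)]  # unexplained P&L per day
    mean_ratio = fmean(upl) / pstdev(hpl)
    variance_ratio = pvariance(upl) / pvariance(hpl)
    return mean_ratio, variance_ratio


def is_breach(mean_ratio, variance_ratio):
    """A month breaches if either ratio falls outside its assumed limit."""
    return abs(mean_ratio) > MEAN_RATIO_LIMIT or variance_ratio >= VARIANCE_RATIO_LIMIT


def desk_moves_to_sa(months):
    """months: chronological list of (hpl, rtpl) pairs, one per month.

    Counts breaches over the most recent 12 months only.
    """
    breaches = sum(is_breach(*monthly_ratios(h, r)) for h, r in months[-12:])
    return breaches >= MAX_BREACHES


# Usage: a risk model that reproduces front office P&L exactly has no UPL.
hpl = [1.0, -2.0, 3.0, -1.0, 0.5, -0.5]
print(monthly_ratios(hpl, hpl))  # (0.0, 0.0)
```

Evaluating `desk_moves_to_sa` at each month end on the trailing 12 months is one simple way to implement "four or more breaches within a 12-month period".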

Aligning market data can be a headache for banks, but it is achievable by using the same market data to feed both the front office and the risk calculations. The components our clients have been most interested in are therefore the other two: risk factor alignment and valuation model alignment.

We have run the PLAT on real portfolios, exploring how risk factor granularity, desk structure and valuation models affect the result. The following high-level observations emerged:

1. Linear directional portfolios can be represented by simple Taylor expansion risk models and pass PLAT. This is good news for banks with simple portfolios as it means that they don't necessarily need to invest in expensive risk model upgrades.

2. Exotics typically fail PLAT unless the risk model and front office model are identical. This may justify a risk model upgrade, but only if the cost of the upgrade is less than the capital saving from using the Internal Model Approach rather than the Standardised Approach.

3. Adding linear directional portfolios to exotic portfolios can result in an overall PLAT pass. This may lead to a consolidation of regulatory desks.

4. The PLAT is relatively insensitive to the granularity of risk factors (e.g. the number of points on an interest rate curve), so the risk factors can be tuned to pass PLAT whilst minimising the non-modellable risk factor (NMRF) capital charge. The PLAT-NMRF conundrum[3] is an area we have been researching in detail, and our white paper on the topic discusses it further.
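Observations 1 and 2 can be seen in miniature with a single risk factor. The sketch below is hypothetical: Black-Scholes stands in for the front office pricer, a second-order (delta-gamma) Taylor expansion stands in for the risk model, and the spot, strike, rate, volatility and 10% shock are illustrative values. For a forward, which is linear in spot, the Taylor P&L matches full revaluation up to rounding; for the option, the neglected higher-order terms leave unexplained P&L.

```python
from math import erf, exp, log, pi, sqrt


def norm_cdf(x):
    return 0.5 * (1.0 + erf(x / sqrt(2.0)))


def norm_pdf(x):
    return exp(-0.5 * x * x) / sqrt(2.0 * pi)


def bs_call(s, k, t, r, vol):
    """Black-Scholes call price, standing in for a front office pricer."""
    d1 = (log(s / k) + (r + 0.5 * vol**2) * t) / (vol * sqrt(t))
    d2 = d1 - vol * sqrt(t)
    return s * norm_cdf(d1) - k * exp(-r * t) * norm_cdf(d2)


def bs_call_greeks(s, k, t, r, vol):
    """Delta and gamma of the Black-Scholes call."""
    d1 = (log(s / k) + (r + 0.5 * vol**2) * t) / (vol * sqrt(t))
    return norm_cdf(d1), norm_pdf(d1) / (s * vol * sqrt(t))


def forward_value(s, k, t, r):
    """Linear instrument: long forward on a non-dividend-paying asset."""
    return s - k * exp(-r * t)


def taylor_pnl(delta, gamma, ds):
    """Delta-gamma Taylor approximation of P&L for a spot move ds."""
    return delta * ds + 0.5 * gamma * ds**2


S, K, T, R, VOL = 100.0, 100.0, 0.25, 0.01, 0.2
DS = 0.10 * S  # illustrative 10% spot shock

# Forward: delta = 1 and gamma = 0, so the expansion is exact.
fwd_hpl = forward_value(S + DS, K, T, R) - forward_value(S, K, T, R)
fwd_rtpl = taylor_pnl(1.0, 0.0, DS)

# Option: full revaluation versus the delta-gamma approximation.
opt_hpl = bs_call(S + DS, K, T, R, VOL) - bs_call(S, K, T, R, VOL)
opt_rtpl = taylor_pnl(*bs_call_greeks(S, K, T, R, VOL), DS)

print(f"forward unexplained P&L: {fwd_rtpl - fwd_hpl:.6f}")
print(f"option unexplained P&L:  {opt_rtpl - opt_hpl:.6f}")
```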

The third observation is also made, from a theoretical point of view, in an article in Risk[4], where the authors model both the hypothetical and the unexplained P&L of each instrument as normally distributed. This yields a model for the probability of passing PLAT at desk level, which the authors use to show that increasing the average correlation between the trades' hypothetical P&L (making the desk more directional) increases the likelihood of passing.
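A small simulation shows the correlation effect. This is our own simplified model, not a reproduction of the one in [4]: each of n trades is assumed to have normally distributed hypothetical P&L with a common pairwise correlation rho, and unexplained P&L that is normal, independent across trades and independent of the hypothetical P&L. Under those assumptions the desk variance ratio is sigma_u^2 / (sigma_h^2 (1 + (n - 1) rho)), so it falls as correlation rises. The volatilities, the 21-day month, the trial count and the 10% / 20% limits are illustrative assumptions.

```python
import random
from math import sqrt
from statistics import fmean, pstdev, pvariance


def desk_variance_ratio(n_trades, rho, sigma_h=1.0, sigma_u=0.5):
    """Expected var(UPL) / var(HPL) at desk level under the assumed model."""
    # var(sum HPL) = n * sigma_h^2 * (1 + (n - 1) * rho); var(sum UPL) = n * sigma_u^2
    return sigma_u**2 / (sigma_h**2 * (1.0 + (n_trades - 1) * rho))


def pass_probability(n_trades, rho, sigma_h=1.0, sigma_u=0.5,
                     days=21, trials=2_000, seed=1):
    """Monte Carlo estimate of passing both ratio tests in a single month."""
    rng = random.Random(seed)
    # A sum of jointly normal P&Ls is normal, so desk P&L can be drawn directly.
    hpl_sd = sqrt(n_trades * sigma_h**2 * (1.0 + (n_trades - 1) * rho))
    upl_sd = sqrt(n_trades) * sigma_u
    passes = 0
    for _ in range(trials):
        hpl = [rng.gauss(0.0, hpl_sd) for _ in range(days)]
        upl = [rng.gauss(0.0, upl_sd) for _ in range(days)]
        mean_ratio = fmean(upl) / pstdev(hpl)
        var_ratio = pvariance(upl) / pvariance(hpl)
        passes += abs(mean_ratio) <= 0.10 and var_ratio < 0.20
    return passes / trials


for rho in (0.0, 0.2, 0.5):
    print(f"rho={rho:.1f}  expected variance ratio={desk_variance_ratio(10, rho):.3f}  "
          f"P(pass one month)={pass_probability(10, rho):.2f}")
```

Drawing the desk totals directly, rather than simulating each trade, is a shortcut that only works because the assumed model is jointly normal.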

The same paper demonstrates that maintaining a PLAT pass for a given desk over a sustained period will be difficult because of flaws inherent in the test ratios. This could be the final nail in the coffin for the PLAT in its current form.
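One mechanism that could make a sustained pass hard to hold, offered as our own illustration rather than the specific argument in [4], is sampling noise: each monthly ratio is estimated from only about 21 daily observations. The sketch below assumes zero-mean, normally distributed, independent daily P&L and the same illustrative 10% and 20% limits. It estimates the chance that a single month breaches for a desk whose true variance ratio is below the limit and then, assuming months are independent, the chance of four or more breaches in a 12-month window.

```python
import random
from math import comb
from statistics import fmean, pstdev, pvariance


def monthly_breach_probability(true_var_ratio, days=21, trials=2_000, seed=7):
    """Chance that one month's *sample* ratios breach, for a desk whose true
    variance ratio is true_var_ratio and whose unexplained P&L has zero mean.

    Assumes normal, independent daily P&L and the illustrative 10% / 20% limits.
    """
    rng = random.Random(seed)
    upl_sd = true_var_ratio ** 0.5  # HPL standard deviation normalised to 1
    breaches = 0
    for _ in range(trials):
        hpl = [rng.gauss(0.0, 1.0) for _ in range(days)]
        upl = [rng.gauss(0.0, upl_sd) for _ in range(days)]
        mean_ratio = fmean(upl) / pstdev(hpl)
        var_ratio = pvariance(upl) / pvariance(hpl)
        breaches += abs(mean_ratio) > 0.10 or var_ratio >= 0.20
    return breaches / trials


def prob_at_least_k_breaches(p, months=12, k=4):
    """Binomial tail: P(k or more breaches in a fixed window), assuming
    breaches are independent from month to month."""
    return sum(comb(months, j) * p**j * (1 - p) ** (months - j)
               for j in range(k, months + 1))


p_month = monthly_breach_probability(0.15)  # true ratio below the 20% limit
print(f"P(breach in one month) = {p_month:.2f}")
print(f"P(4+ breaches in 12 months) = {prob_at_least_k_breaches(p_month):.2f}")
```

A rolling 12-month window evaluated every month gives more opportunities to reach four breaches than the single fixed window assumed here.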

So what now? Despite the troublesome ratios, the principle behind the PLAT was widely welcomed by the industry and it is unlikely that the test will be dropped entirely. The European Banking Authority has recently stated that it intends to reboot the test in the Regulatory Technical Standard supporting the Capital Requirements Regulation (CRR II)[5].

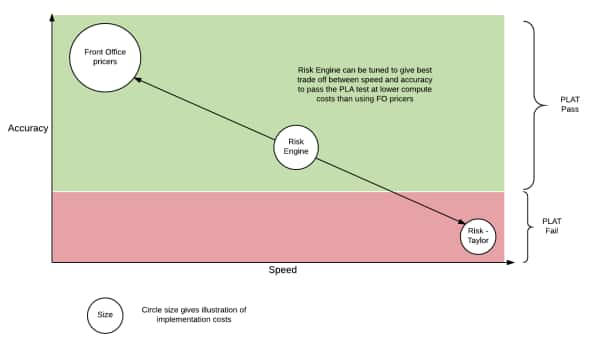

A test that remains very sensitive to the size of the unexplained P&L may push banks towards overhauling their risk systems to use front office pricers, at great cost. But is this the only option? Ideally, a risk model would sit between the two extremes, combining accuracy close to that of a front office pricer with the speed of a market risk approximation (e.g. Taylor expansion or discrete valuation grid approaches). This is illustrated in Figure 2.

Figure 2: Ideal behaviour of risk engine
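The accuracy trade-off between the two market risk approximations mentioned above can be sketched for one option and one risk factor. In this hypothetical example Black-Scholes again stands in for the front office pricer, the discrete valuation grid is an assumed spot ladder in 5% steps with linear interpolation (and flat extrapolation beyond its ends), and the Taylor model is delta-gamma. All parameters are illustrative. By construction, the Taylor expansion is accurate for small moves but drifts for large ones, while the grid error depends on the distance to the nearest node.

```python
from bisect import bisect_right
from math import erf, exp, log, pi, sqrt


def norm_cdf(x):
    return 0.5 * (1.0 + erf(x / sqrt(2.0)))


def norm_pdf(x):
    return exp(-0.5 * x * x) / sqrt(2.0 * pi)


def d1(s, k, t, r, vol):
    return (log(s / k) + (r + 0.5 * vol**2) * t) / (vol * sqrt(t))


def bs_call(s, k, t, r, vol):
    """Black-Scholes call: stands in for the front office pricer."""
    a = d1(s, k, t, r, vol)
    return s * norm_cdf(a) - k * exp(-r * t) * norm_cdf(a - vol * sqrt(t))


S0, K, T, R, VOL = 100.0, 100.0, 0.25, 0.01, 0.2
BASE = bs_call(S0, K, T, R, VOL)
DELTA = norm_cdf(d1(S0, K, T, R, VOL))
GAMMA = norm_pdf(d1(S0, K, T, R, VOL)) / (S0 * VOL * sqrt(T))

# Discrete valuation grid: one full revaluation per node of a 5% spot ladder
GRID = [S0 * (1 + 0.05 * i) for i in range(-6, 7)]
GRID_VALUES = [bs_call(s, K, T, R, VOL) for s in GRID]


def grid_price(s):
    """Linear interpolation between grid nodes (flat beyond the ladder ends)."""
    if s <= GRID[0]:
        return GRID_VALUES[0]
    if s >= GRID[-1]:
        return GRID_VALUES[-1]
    i = bisect_right(GRID, s) - 1
    w = (s - GRID[i]) / (GRID[i + 1] - GRID[i])
    return (1 - w) * GRID_VALUES[i] + w * GRID_VALUES[i + 1]


def taylor_price(s):
    """Delta-gamma expansion around today's spot."""
    ds = s - S0
    return BASE + DELTA * ds + 0.5 * GAMMA * ds**2


for shock in (-0.20, -0.07, 0.03, 0.12, 0.25):
    s = S0 * (1 + shock)
    full = bs_call(s, K, T, R, VOL)
    print(f"shock {shock:+.2f}: taylor error {taylor_price(s) - full:+.4f}, "
          f"grid error {grid_price(s) - full:+.4f}")
```

Both approximations are cheap to evaluate per scenario; neither reproduces the front office price everywhere, which is the gap Figure 2 describes.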

For risk applications, full front office pricing accuracy is not required, because the goal is to generate a loss distribution rather than to beat a competitor to a transaction price. A certain level of unexplained P&L is therefore tolerable if it simplifies the risk system and reduces hardware costs.

Under FRTB, the number of scenarios used to price trades has increased by an order of magnitude compared to Basel 2.5[6]. Consequently, front office pricers designed for accuracy rather than performance may never be able to calculate FRTB capital within an overnight batch. Techniques that build a risk model by fitting a surface to a limited set of valuations generated by a front office model may offer the optimal balance between accuracy and speed.
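The surface-fitting idea can be sketched in one dimension. This is an illustration under our own assumptions, not IHS Markit's method: a stand-in Black-Scholes pricer is called only at Chebyshev points across an assumed spot range, and every scenario is then evaluated with barycentric Chebyshev interpolation. Chebyshev interpolation is our choice of fitting technique here, and a production risk model would need to fit surfaces over many risk factors at once. The class name `ChebyshevProxy`, the range, node count and scenario count are all hypothetical.

```python
from math import cos, erf, exp, log, pi, sqrt


def norm_cdf(x):
    return 0.5 * (1.0 + erf(x / sqrt(2.0)))


def bs_call(s, k=100.0, t=0.25, r=0.01, vol=0.2):
    """Black-Scholes call: a stand-in for an expensive front office pricer."""
    d1 = (log(s / k) + (r + 0.5 * vol**2) * t) / (vol * sqrt(t))
    d2 = d1 - vol * sqrt(t)
    return s * norm_cdf(d1) - k * exp(-r * t) * norm_cdf(d2)


class ChebyshevProxy:
    """1D proxy fitted to pricer values at Chebyshev points (second kind),
    evaluated with the barycentric interpolation formula."""

    def __init__(self, pricer, lo, hi, n):
        mid, half = 0.5 * (lo + hi), 0.5 * (hi - lo)
        # Chebyshev points cos(j*pi/n) on [-1, 1], mapped onto [lo, hi]
        self.nodes = [mid + half * cos(j * pi / n) for j in range(n + 1)]
        # The only calls to the expensive pricer happen here
        self.values = [pricer(x) for x in self.nodes]
        # Barycentric weights: alternating signs, halved at the two endpoints
        self.weights = [(-1) ** j * (0.5 if j in (0, n) else 1.0) for j in range(n + 1)]

    def __call__(self, x):
        num = den = 0.0
        for xj, fj, wj in zip(self.nodes, self.values, self.weights):
            if x == xj:  # exactly on a node: return the stored valuation
                return fj
            c = wj / (x - xj)
            num += c * fj
            den += c
        return num / den


# Usage: 33 pricer calls, then many cheap scenario evaluations
proxy = ChebyshevProxy(bs_call, 60.0, 140.0, n=32)
scenarios = [60.0 + 80.0 * i / 9999 for i in range(10_000)]
max_err = max(abs(proxy(s) - bs_call(s)) for s in scenarios)
print(f"{len(proxy.nodes)} pricer calls, max abs error: {max_err:.2e}")
```

The design choice is that the expensive pricer runs n + 1 times however many scenarios are evaluated, while each scenario costs only a short weighted sum. Raising n trades more pricer calls for accuracy.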

Reaching this middle ground of sufficient accuracy at high speed (and so low hardware costs) is an area of research for IHS Markit and we'll give further details in future posts.

[1] Risk Magazine, "P&L test in Europe's FRTB may not work - research", 18 April 2017

[2] Risk Magazine, "The P&L attribution mess", 2 August 2016

[3] Risk Magazine, "Inconsistent FRTB model advice vexes dealers", 16 February 2017

[4] Peter Thompson, Hayden Luo and Kevin Fergusson, "The P&L Attribution test", 13 January 2017

[5] Risk Magazine, "EBA plans reboot of FRTB's P&L test", 31 March 2017

[6] McKinsey Working Papers on Corporate & Investment Banking, No. 11

Tel: +44 20 7260 2195

stuart.nield@ihsmarkit.com


This article was published by S&P Global Market Intelligence and not by S&P Global Ratings, which is a separately managed division of S&P Global.